A new report details a dystopian future for humans: we have built a technology that will soon produce an unreality our minds will struggle to distinguish from reality. Titled “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation,” the report was authored by 26 experts from 14 institutions, including Oxford University’s Future of Humanity Institute, Cambridge University’s Centre for the Study of Existential Risk, Elon Musk’s OpenAI, and the Electronic Frontier Foundation. The spooky part? They only looked at the near future. This isn’t some Jetsons-style society that our grandchildren will have to deal with, but an evolving threat that everyone will soon be fighting back against. Per the report:

For the purposes of this report, we only consider AI technologies that are currently available (at least as initial research and development demonstrations) or are plausible in the next 5 years, and focus in particular on technologies leveraging machine learning.

The report is far more comprehensive than this list suggests, but here are 10 examples of why we should truly fear artificial intelligence.

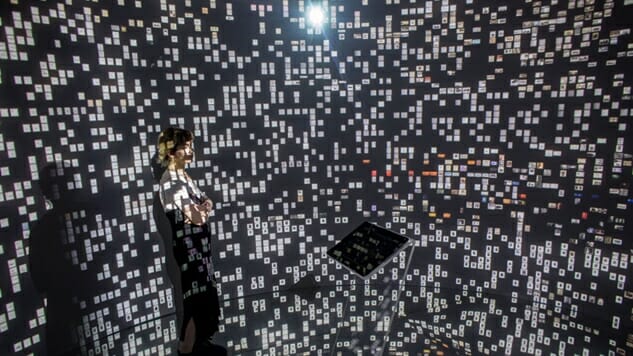

1. AI Can Produce Images That Are Indistinguishable from Photos

Figure 1 illustrates this trend in the case of image recognition, where over the past half-decade the performance of the best AI systems has improved from correctly categorizing around 70% of images to near perfect categorization (98%), better than the human benchmark of 95% accuracy. Even more striking is the case of image generation. As Figure 2 shows, AI systems can now produce synthetic images that are nearly indistinguishable from photographs, whereas only a few years ago the images they produced were crude and obviously unrealistic.

2. Humans Have Not Reached the Pinnacle of Performance

For many other tasks, whether benign or potentially harmful, there appears to be no principled reason why currently observed human-level performance is the highest level of performance achievable, even in domains where peak performance has been stable throughout recent history, though as mentioned above some domains are likely to see much faster progress than others.

3. Assassinations Will Be Easier to Carry Out, and Those Responsible Easier to Conceal

AI systems can increase anonymity and psychological distance. Many tasks involve communicating with other people, observing or being observed by them, making decisions that respond to their behavior, or being physically present with them. By allowing such tasks to be automated, AI systems can allow the actors who would otherwise be performing the tasks to retain their anonymity and experience a greater degree of psychological distance from the people they impact. For example, someone who uses an autonomous weapons system to carry out an assassination, rather than using a handgun, avoids both the need to be present at the scene and the need to look at their victim.

4. Attacks That We’re Already Familiar With, Like Phishing Scams, Will Become More Powerful and Prevalent

For many familiar attacks, we expect progress in AI to expand the set of actors who are capable of carrying out the attack, the rate at which these actors can carry it out, and the set of plausible targets. This claim follows from the efficiency, scalability, and ease of diffusion of AI systems. In particular, the diffusion of efficient AI systems can increase the number of actors who can afford to carry out particular attacks. If the relevant AI systems are also scalable, then even actors who already possess the resources to carry out these attacks may gain the ability to carry them out at a much higher rate. Finally, as a result of these two developments, it may become worthwhile to attack targets that it otherwise would not make sense to attack from the standpoint of prioritization or cost-benefit analysis.

5. It Will Become Far Easier to Impersonate Anyone’s Voice

For example, most people are not capable of mimicking others’ voices realistically or manually creating audio files that resemble recordings of human speech. However, there has recently been significant progress in developing speech synthesis systems that learn to imitate individuals’ voices (a technology that’s already being commercialized). There is no obvious reason why the outputs of these systems could not become indistinguishable from genuine recordings, in the absence of specially designed authentication measures. Such systems would in turn open up new methods of spreading disinformation and impersonating others.

6. It Will Be Much Easier to Take Control Over Autonomous Systems like Cars or Weapons

For example, the use of self-driving cars creates an opportunity for attacks that cause crashes by presenting the cars with adversarial examples. An image of a stop sign with a few pixels changed in specific ways, which humans would easily recognize as still being an image of a stop sign, might nevertheless be misclassified as something else entirely by an AI system. If multiple robots are controlled by a single AI system run on a centralized server, or if multiple robots are controlled by identical AI systems and presented with the same stimuli, then a single attack could also produce simultaneous failures on an otherwise implausible scale. A worst-case scenario in this category might be an attack on a server used to direct autonomous weapon systems, which could lead to large-scale friendly fire or civilian targeting.
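To make the stop sign example concrete, here is a minimal sketch of an adversarial example against a toy linear classifier. This is not the report's method and not how a real self-driving system works; the 100-pixel "image," the weights, the bias, and every function name are hypothetical values chosen purely for illustration. It only shows the core idea: nudging every pixel slightly in the direction the model is most sensitive to can flip the label even though no pixel changes by more than 5% of its range.

```python
# Toy adversarial example. All numbers and names are illustrative assumptions.

def sign(value):
    """Return 1, -1, or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)

def score(weights, bias, pixels):
    """Linear score: positive means the toy model says 'stop sign'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def classify(weights, bias, pixels):
    return "stop sign" if score(weights, bias, pixels) > 0 else "other"

def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon against its weight, clipped to [0, 1]."""
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for w, p in zip(weights, pixels)]

# Hypothetical model: 100 pixels with alternating positive and negative weights.
WEIGHTS = [0.05 if i % 2 == 0 else -0.05 for i in range(100)]
BIAS = 0.2
ORIGINAL = [0.5] * 100           # a flat gray "image" standing in for a stop sign
ADVERSARIAL = perturb(WEIGHTS, ORIGINAL, epsilon=0.05)

if __name__ == "__main__":
    print(classify(WEIGHTS, BIAS, ORIGINAL))     # label for the clean image
    print(classify(WEIGHTS, BIAS, ADVERSARIAL))  # label after the small perturbation
```

In this sketch each pixel moves from 0.5 to either 0.45 or 0.55, a change a person would barely notice, yet the many small shifts add up and push the toy model's score below zero.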

7. Attacks on AI Itself Will Become More Prevalent

Finally, we should expect attacks that exploit the vulnerabilities of AI systems to become more typical. This prediction follows directly from the unresolved vulnerabilities of AI systems and the likelihood that AI systems will become increasingly pervasive.

This is where the paper moves from predictions to hypothetical scenarios, so everything below is less certain than everything above. Any affirmative language is simply the researchers describing a theoretical scenario, not a claim that it will happen.

8. AI Could Impersonate a Range of Trusted Parts of Your Life

Victims’ online information is used to automatically generate custom malicious websites/emails/links they would be likely to click on, sent from addresses that impersonate their real contacts, using a writing style that mimics those contacts. As AI develops further, convincing chatbots may elicit human trust by engaging people in longer dialogues, and perhaps eventually masquerade visually as another person in a video chat.

9. AI Could Make It Much Easier for Anyone to Execute a Highly Skilled Kinetic Attack

AI-enabled automation of high-skill capabilities — such as self-aiming, long-range sniper rifles — reduce the expertise required to execute certain kinds of attack.

Human-machine teaming using autonomous systems increase the amount of damage that individuals or small groups can do: e.g. one person launching an attack with many weaponized autonomous drones.

10. AI Could Dramatically Empower Attacks On Democracy Similar to Russia’s Influence on Our 2016 Election

State surveillance powers of nations are extended by automating image and audio processing, permitting the collection, processing, and exploitation of intelligence information at massive scales for myriad purposes, including the suppression of debate.

Highly realistic videos are made of state leaders seeming to make inflammatory comments they never actually made.

Individuals are targeted in swing districts with personalised messages in order to affect their voting behavior.

AI-enabled analysis of social networks are leveraged to identify key influencers, who can then be approached with (malicious) offers or targeted with disinformation.

Bot-driven, large-scale information-generation attacks are leveraged to swamp information channels with noise (false or merely distracting information), making it more difficult to acquire real information.

Media platforms’ content curation algorithms are used to drive users towards or away from certain content in ways to manipulate user behavior.

Jacob Weindling is a staff writer for Paste politics. Follow him on Twitter at @Jakeweindling.